ChatGPT

Last updated January 19, 2024

Disclaimer: this is a living document, meant as an FAQ. Nothing on this page is an official rule or regulation of ETH Zurich.

GPT (Generative Pre-trained Transformer) is a neural network that generates plausible fiction. The "generative" algorithm works like the autocomplete function for text messages on smartphones, which suggests likely next words to the user - when typing “I will arrive” the phone might suggest “at”, “in”, and “tomorrow.” As an example, always clicking on the first suggested word would generate the following sentence: “I will arrive at home office in about an hour to get to the university,” which is almost grammatically correct, but nonsensical. While autocomplete works with words, GPT works with tokens (similar but not identical to syllables); it was trained on a huge corpus of text including scientific literature, and the size of its neural network is several orders of magnitude larger than what is behind autocomplete. Generative neural-network algorithms are only one particular approach to Artificial Intelligence - there are several other promising approaches. The result of GPT is highly plausible fiction which oftentimes happens to be factually correct.
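To make the autocomplete analogy concrete, here is a minimal sketch of a word-level next-word predictor in Python. It is only an illustration: the three-sentence corpus and the function names are made up for this example, and GPT itself uses a large neural network over tokens rather than simple word counts.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for this sketch); real systems
# are trained on vastly more text.
CORPUS = (
    "i will arrive at home in about an hour . "
    "i will arrive at the university tomorrow . "
    "i will be at home in about an hour ."
)


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    followers = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def suggest(followers, word, k=3):
    """Return up to k most frequent next words, like an autocomplete bar."""
    return [w for w, _ in followers[word].most_common(k)]


def autocomplete(followers, start, max_words=8):
    """Always accept the first suggestion until a sentence end is suggested."""
    words = start.split()
    for _ in range(max_words):
        options = suggest(followers, words[-1], k=1)
        if not options or options[0] == ".":
            break
        words.append(options[0])
    return " ".join(words)


model = train_bigrams(CORPUS)
print(suggest(model, "will"))        # candidate next words after "will"
print(autocomplete(model, "i will"))  # sentence built from first suggestions
```

The predictor has no notion of truth; it only continues with whatever was most frequent in its corpus, which is the sense in which the output is "plausible fiction."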

ChatGPT is an interface to GPT which allows for multilingual prompting by issuing commands and questions. The system can be accessed at https://chat.openai.com/.

The best way to learn about ChatGPT’s capabilities is to simply play with it at https://chat.openai.com/. The tool can for example write essays, presentations, poems, and program code. It can summarize and translate text, and it can also solve, grade, and construct homework and exam problems. It can also recognize elements in images, recognize handwriting, and generate new images and figures.

The system is not "smart" in a human sense, but it has been shown to perform well on a number of standardized tests. OpenAI has compiled some results for its subscriber version GPT-4 at https://openai.com/research/gpt-4, which appear to be consistent with independent research and experiences.

GPT-3.5, the currently freely available version, has problems with basic symbolic and numerical math, which hampers its performance on the assessment components of traditional science courses. Still, even with GPT-3.5, it has been shown that the system would pass the assessment components of some undergraduate science courses, e.g., the non-major introductory physics courses of American colleges - barely scraping by, but it would pass.

Anecdotal evidence with GPT-4, the version available to subscribers ($20/month), shows that this situation has partly been remedied, and the system is estimated to achieve a score of about 80% on the assessment components of an introductory physics course.

While the autocomplete on smartphones permanently "learns" from the user's input, GPT does not permanently learn new knowledge anymore: the “P” in GPT stands for "pre-trained", and for the current version GPT-4, that pre-training ended in April 2023. When being prompted for something that may have happened later, the system uses the Bing search engine to possibly locate newer information from what is available on the web.

However, the system learns within the confines of particular dialogues, where it is possible to refer to statements or conclusions that occurred earlier within that dialogue.

The company behind ChatGPT, OpenAI, continues to fine-tune the system, partly based on the thumbs up/down ratings that users can provide, but this is not new knowledge.

We don't know. There certainly were scientific textbooks in its corpus, news, history, scholarly work, and wide sections of the Web - all before April 2023, its "knowledge cutoff". The "we don't know" and the fact that OpenAI likely won't tell pretty much guarantee that ChatGPT won't produce truly scholarly work. Instead, when prompted on any topic, it will give an excellent overview of what is out there: the good, the bad, and the ugly, in its typical list style. This can provide an extremely helpful start on essays, presentations, and papers, since it will likely come up with aspects and topics that the human author might not have considered, but it is still left to the author to sort out and verify the results.

No. For students wanting to cheat on STEM homework, it would still be a much more efficient and safe choice to copy from fellow students or use some of the online services like Chegg.

For any kind of scientific writing, ChatGPT can provide a good start to get over writer's block, but students will still need to work on securely anchoring their work in literature, etc. - ChatGPT is remarkable, but at its very core, still produces nothing but plausible fiction.

Strictly, technically, and in legal terms: no. Plagiarism is uncredited copying or use of somebody else's intellectual property; unless personhood is assigned to Artificial Intelligence, using this tool is technically no more plagiarism than doing a Google Scholar search, using Grammarly or DeepL, or using R or Python to carry out large calculations. While computational libraries are frequently cited to give professional credit to the authors, generally none of these other tools are expected to receive credit - they are simply tools-of-the-trade. One might also argue that using ChatGPT is ghostwriting.

But, and this is a very important "but": simply copying ChatGPT's output and submitting it as one's own work is unacceptable! The legal definition of plagiarism was established before AI-tools became available. As a community, we have to respect and enforce the concept of what it means to be one's own work - users have to make the output of any AI-tool truly their own work before they can call it that.

Students will always need to carefully work with the output of ChatGPT, not least because it could contain all kinds of factual errors. ChatGPT is also unable to provide any sources for statements - when asked to provide references, it produces plausible-sounding citations that are in fact complete nonsense. Maybe, to some small degree, the concern about students writing scientific texts with ChatGPT is also less a matter of plagiarism than a worry about the embarrassment ensuing when embedded nonsense goes undetected during grading.

Of course, ChatGPT can be abused to commit undetectable plagiarism: copy/pasting a passage of a carefully researched paper into ChatGPT and asking the system for a summary or paraphrase will very likely result in an equally factual text that is undetectable by plagiarism tools.

A more thorough discussion can be found on our page about AI and academic integrity.

No, not reliably. ChatGPT responses are the result of a probabilistic algorithm and thus generally not reproducible - any two students using ChatGPT will turn in different results. Responses are not simply fragments of the text corpus that was used for training, and thus, response passages cannot be found with Google; ChatGPT does not plagiarize. There are tools that might detect ChatGPT output, such as ZeroGPT and possibly TurnItIn; however, they should not be relied upon, and they will always be behind the curve in the rapidly developing field of AI.
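The following sketch illustrates why probabilistic generation is generally not reproducible. It is a generic illustration of sampling from a probability distribution, not OpenAI's actual implementation; the token scores, the temperature parameter, and the function names are assumptions made up for this example.

```python
import math
import random

# Hypothetical scores for four candidate next tokens (made up for this sketch);
# a real model scores a vocabulary of many thousands of tokens.
NEXT_TOKEN_SCORES = {"at": 2.0, "in": 1.5, "tomorrow": 1.0, "late": 0.2}


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    top = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_token(scores, rng, temperature=1.0):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


def sample_many(seed, n=10):
    """Simulate one 'session': n draws from a freshly seeded random generator."""
    rng = random.Random(seed)
    return [sample_token(NEXT_TOKEN_SCORES, rng) for _ in range(n)]


# Two students, two different random states: the draws will typically differ.
print(sample_many(seed=1))
print(sample_many(seed=2))
```

Because every word is drawn at random from a weighted list of candidates, small differences compound over a long response, which is why two users rarely receive the same text for the same prompt.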

In the long run, any effort to fight ChatGPT or similar AI-tools will likely be a futile arms race. Instead, the real question should be: what is the point of fighting such tools?

A more thorough discussion can be found on our page about AI and academic integrity.

First of all, there is every indication that the majority of our students are honest. Forbidding the use of undetectable tools on unsupervised assignments or demanding some sort of honor code likely ends up punishing the honest students; students are more aware of other students breaking rules than faculty are, and it creates moral dilemmas for them.

Some ideas can be found on our page about AI and academic integrity.

In faculty meetings these days, one frequently hears exasperated statements like "so, should we just give up?!" - of course, faculty are thinking about cheating, but also on a more fundamental level: are our curricula obsolete?

Currently, the capabilities of ChatGPT might appear to threaten academic integrity and good scientific practice, and they might appear to dig around the foundations of our curricula, but in a way, the discussion is similar to the extensive and hotly contested discussions about using pocket calculators in schools and for exams in the 1970s - eventually, pocket calculators were understood as what they really are: tools.

Yes, ChatGPT threatens certain assessment types. The typical, simple take-home essay is probably a thing of the past; while instructors used to protect those essays against plagiarism by coming up with creative new prompts, ChatGPT will respond to almost any prompt with plausible responses in the desired length and style. Even the "knowledge cutoff" of April 2023 is not a hurdle, since learners could also feed appropriate news articles into the dialogue.

But even that is nothing new: DeepL, a tool that many of us use regularly (including professionally!), essentially made simple take-home translation assignments obsolete.

ChatGPT is a great way of getting over writer's block when creating essays and presentations, just like DeepL is a great way to get started with a translation. But just like with computer-generated translations, computer-generated essays and presentations will need further work to be acceptable to anything but cursory grading. When prompted about its weaknesses, ChatGPT "admits" that it can become very verbose and fall short on being factual. Our students need to learn unbiased, concise, precise, and factually correct communication. In a way, ChatGPT simply raised the bar on certain kinds of assignments and their grading.

Here are some ideas of how to use ChatGPT in homework or project work for STEM disciplines:

- Evaluating the output of AI-tools will likely become an important skill in the future. Instead of making students solve a problem, make them pose it to ChatGPT, turn in the response they got and their evaluation of it: is it correct? if not, where did it go wrong, and how? The assignment is graded based on the students’ answers to these questions.

- Assign order-of-magnitude or so-called Fermi questions. Anecdotal evidence hints that some of the classic questions may have been part of the training corpus of ChatGPT, but that it generally fails to satisfactorily answer those even when explicitly prompted for nothing but estimates. Future scientists and engineers should be proficient in exactly these kinds of problems, as they may at times be more relevant for making decisions and reasoning in real life than exact numerical answers.

- Ask students to have ChatGPT construct problems. These problems tend to look reasonable, but some of them will not make sense. An instructional scenario would be to have students go through the generated problems and explain why they are solvable or not.

- Let ChatGPT formulate common misconceptions about a topic. Use these as distractors in Multiple Choice items. Let ChatGPT generate answers to a question of a Multiple Choice item. If they miss the mark, use them as distractors.

- Ask students to formulate questions relating to the course topics that ChatGPT will answer correctly/well. Ask students to formulate questions relating to the course topics that ChatGPT will answer incorrectly or fail to answer well.

Here are some ideas of how to design homework or projects where using ChatGPT will only be of limited help:

- Ask students to reflect on what happened in the course today (or last week), or about their personal experiences with a field trip or a project. Not only is this kind of reflection helpful for learning, it also is an experience that ChatGPT simply would not have had.

- Have students draw solutions, for example “Draw the acceleration graph of a car that stops in front of and then drives off from a stop light” or “Draw a circuit diagram for three light bulbs and a battery. Two light bulbs A and B should be equally bright, and one light bulb C should be brighter.” GPT-4 will likely generate a beautiful-looking image like the one on top of this page, but no circuit diagram.

- Have students calculate or estimate physical quantities based on their own measurements as homework. Towards that end, smartphones with all their sensors are useful for collecting data in real-life situations. Students can use any tools of their choice to analyze and summarize these data.

Overall, though, one needs to be careful not to construct assignments based on what AI currently cannot do. This may change tomorrow. Make assignments that draw on human experiences and creativity.

Not so fast, no. ChatGPT is an ETH-external cloud service which requires identifiable information to sign up. It is thus subject to privacy and data security regulations when used in teaching and learning. At this point, it appears unlikely that it will make the list of released services, as entered data will be used for development purposes in ways unlikely to be disclosed. You cannot force any member of the ETH community to use this service; usage has to remain voluntary.

No, not saying that. But be aware that ChatGPT, or, for that matter, any AI-tool, is in rapid development toward a partially unknown future. Further development of GPT will take advantage of user interactions with ChatGPT (a "non-API service"); the company is open about that. While ChatGPT does not learn from these interactions in unsupervised ways (e.g., one dialogue is very unlikely to leak into another dialogue), it seems wise to not disclose any private, confidential, or otherwise identifiable information in ChatGPT dialogues.

No, of course not! There always will be fundamental tasks that students need to be able to carry out without any outside assistance, neither human nor artificial. One cannot look up everything all the time, and certain conceptual understanding and knowledge is simply required for future courses and employment. Those need to be tested in a supervised, restricted environment.

The learning objectives are also important when deciding whether to allow tools, and context matters: when learning the fundamentals of addition and multiplication in elementary school, even a pocket calculator is probably not a helpful tool; when learning about analyzing Big Data, all those tasks would naturally and without a second thought be delegated to a computer.

It is important to note that here, ChatGPT is not the end of all problems. To even access ChatGPT, students need access to the internet - and with the internet come all kinds of other, more efficient cheating options. One needs to be very careful to not set rules that cannot be enforced.

As with any other technological resource, examiners should ask themselves: Do I want to allow the use of ChatGPT (or any other resources) when working on assessment tasks - or not?

- Assessments where ChatGPT is not allowed: For paper-based examinations, proceed as you would have before ChatGPT. For computer-based examinations, ETH offers a service for secure On Campus Online Examinations. However, make sure that such limitations are common-sense, and that it is obvious to learners why such restrictions are justified by the subject-matter - you want to avoid the impression that restrictions are merely a matter of convenience or inertia on the part of the faculty.

- Assessments where ChatGPT is expressly allowed: We can expect that many disciplines will rapidly integrate ChatGPT and similar technologies into their practices and workflows. This may create a demand for appropriately aligned examinations, where students also have access to ChatGPT, while other resources, such as messenger apps or chat forums, remain effectively blocked. Such examination scenarios are already routinely conducted with ETH’s infrastructure for On Campus Online Examinations - though not yet with ChatGPT, but instead with other web-based resources.

Furthermore, there may exist a demand for two- or multi-phase examinations, where students first solve tasks without access to ChatGPT and then solve other tasks while being granted access. ETH’s Online Examinations service also enables such multi-phase scenarios.

In the long run, ETH's Bring-Your-Own-Device initiative will bring additional flexibility, particularly when used with Safe-Exam-Browser and other technologies currently in development, such as controlled WLAN subnets. However, like with any other exam, make sure that there are no restrictions that cannot be enforced, as that punishes the honest students.

Other possibilities are opened by oral exams, during which the examiner could have one or more students do some problems with and some problems without external resources. Oral exams are not only a way to provide supervision against cheating, but also a way to better probe students’ competencies. Oral examinations can focus on the interactive and dynamic process rather than some static work result.

Yes, many new opportunities open up. For example, at ETH, we frequently use LaTeX, and it seems like GPT can provide avenues for making these documents accessible.

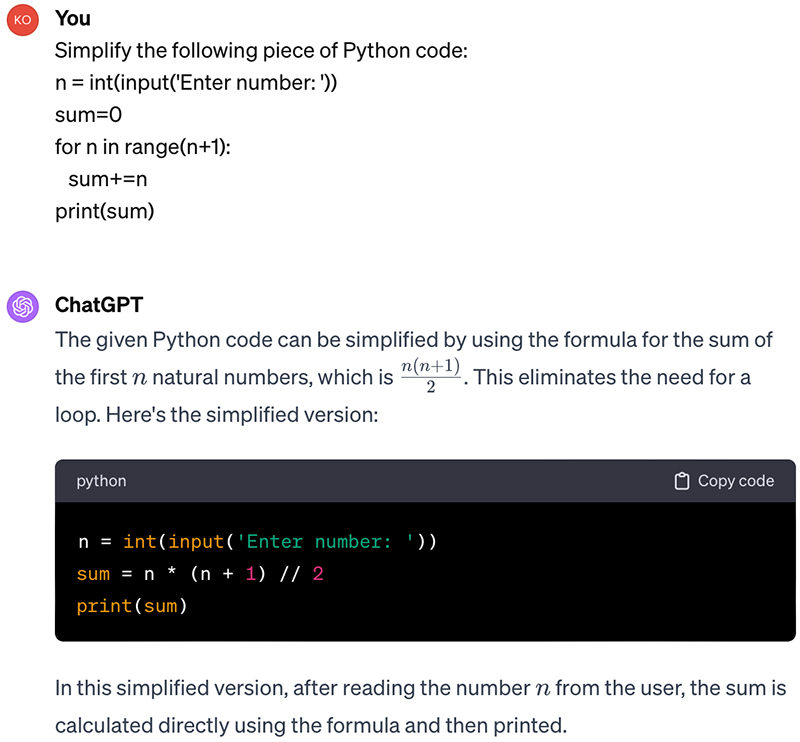

Oh yes, it can. For example, ChatGPT does amazingly well on small programming assignments in Python (including VPython) and R. It translates problem descriptions into code, and it also fixes bugs when prompted. We still need to learn what that means for typical Jupyter Notebook assignments.

One answer might be to give more open-ended problem descriptions or simply scenarios, which the learner still has to translate into more concrete prompts that ChatGPT can translate into code. Computational competencies for non-Computer-Science majors would thus start with concepts of computability, conceptualization, and formulation of the problem that needs solving.

ChatGPT can produce plausible-sounding grades and grade justifications on given rubrics and grading scales. However, anecdotal evidence shows that these grades and justifications would hardly be defensible for high-stakes exams - at least not for now.

However: GPT could soon be used for grading formative assessment, and it certainly shows promise. An exploratory study found an R² of 0.8 between human and machine grading.
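For readers unfamiliar with the measure, the sketch below shows one common way to compute such a value: as the square of the Pearson correlation between two sets of scores. This is an assumption about the definition; the text above does not say how the study computed its R². The scores are invented purely for illustration and are not data from any study.

```python
def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long score lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Invented points for six hypothetical submissions (not real grading data).
human_points = [2, 4, 5, 7, 9, 10]
machine_points = [3, 4, 6, 6, 8, 10]

r_squared = pearson_r(human_points, machine_points) ** 2
print(f"R^2 between human and machine points: {r_squared:.2f}")
```

A value of 1 would mean that the machine's points are a perfect linear function of the human's points; a value of 0.8 indicates strong but imperfect agreement, which can still hide substantial disagreement on individual submissions.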

A sadly entertaining thought would be to use ChatGPT to grade papers written by ChatGPT, which would establish a fully self-sufficient parallel world of spurious scientific discourse.

In any case, at ETH Zurich, it is not permitted to completely automatically assign grades; AI could assist in giving points, but the grade always has to be assigned by a human.

ChatGPT probably won't do any more damage than TikTok already did. More seriously: students come to us to learn. They would not be here if they did not value critical thinking, creativity, independent thought, and pushing the envelope on science, math, and technology. It is unlikely that as a learning community we would deprive ourselves of the joy of figuring things out.

On the other hand, there is certainly a danger in over-reliance on technology. When coming to an unknown city, today most of us just rely on the directions from our smartphones. That works well, mostly, unless the GPS-signal gets reflected from buildings or somehow the phone's map is turned 180 degrees with respect to reality. Walking in circles for miles, one can bemoan the loss of any intuitive sense of direction and skill of dead reckoning. New scientific territory is like an unknown city and this is getting way too philosophical.

Probably not about ChatGPT in particular, as Artificial Intelligence tools will proliferate and become ubiquitous. For example, Microsoft plans to embed an AI "Copilot" into each of its Office products, and Google has come out with its own systems Bard and Gemini. Any rapidly written, overly specific or restrictive rules and regulations will run the danger of looking like action for action's sake, and apparent quick fixes might sound ridiculous a few months from now.

We also need to be careful about restricting tools where we cannot justify it. It makes sense to forbid the use of computers in chess tournaments, since computers could beat humans virtually anytime, which destroys the enjoyment of a wonderful game - this is similar to forbidding doping or "performance-enhancing drugs" in sports. Science, math and engineering never worked that way; we always worked with the best available tools, and in fact were expected to do so to stay competitive.

Having said that, simply copy/pasting the output of an AI-tool and submitting it as one's own work is unacceptable.

Disclaimer: nothing in this FAQ is an official rule or regulation of ETH Zurich!

Comments, suggestions, etc.: Gerd Kortemeyer,