3D mapping of entire buildings with mobile devices

Computer scientists working in a group led by ETH Professor Marc Pollefeys have developed a piece of software that makes it very easy to create 3D models of entire buildings. Running on a new type of tablet computer, the program generates 3D maps in real time.

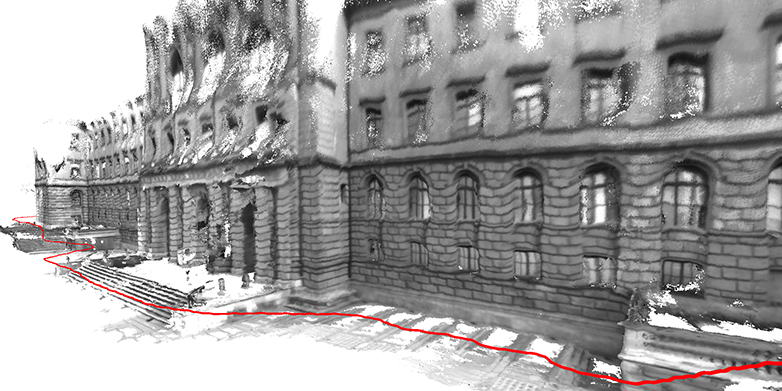

When Thomas Schöps wants to create a three-dimensional model of the ETH Zurich main building, he pulls out his tablet computer. As he completes a leisurely walk around the structure, he keeps the device’s rear-facing camera pointing at the building’s façade. Bit by bit, an impressive 3D model of the edifice appears on the screen. It takes Schöps, a doctoral student at the Institute for Visual Computing, just 10 minutes to digitise a historical structure such as the main building.

He developed the software running on the device in cooperation with his colleagues from the group led by Marc Pollefeys, Professor of Informatics. Development was carried out as part of Google’s Project Tango, in which the internet company is collaborating with 40 universities and companies. ETH Zurich is one of them.

Pixel comparison

The ETH scientists’ method works by purely optical means. It is based on comparing multiple images, which are taken on the tablet by a camera with a fisheye lens, and uses the principle of triangulation in a manner similar to that applied in geodetic surveying. To put it simply: the software analyses two images of a building’s façade that were taken from different positions. For each pixel in one image, it searches for the corresponding pixel in the other. From these two points and from the camera’s known position and viewing angle for each image, the software can determine how far each picture element is from the device and can use this information to generate a 3D model of the object. Long gone are the days when the models were restricted to the outlines of buildings and basic features such as window openings and doorways. Instead, they now even show architectural details such as the arrangement of bricks in a stone façade.
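The triangulation step described above can be illustrated with a minimal sketch. It is not the ETH group's implementation: it assumes that each matched pixel has already been converted into a viewing ray (a camera position plus a direction), which in practice requires a calibrated camera model for the fisheye lens. The function name `triangulate_midpoint` and its parameters are hypothetical. The sketch finds the point closest to both rays, a standard technique known as midpoint triangulation.

```python
import math

def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Estimate the 3D point seen along two viewing rays.

    c1, c2: camera positions (x, y, z) for the two images.
    d1, d2: ray directions through the matched pixels (need not be unit length).
    Returns the midpoint of the shortest segment joining the two rays,
    or None if the rays are (almost) parallel and no depth can be recovered.
    """
    def dot(u, v):
        return sum(x * y for x, y in zip(u, v))

    w = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)

    denom = a * c - b * b
    if abs(denom) < eps * a * c:
        return None  # parallel rays: the two views give no usable baseline

    # Ray parameters of the closest points on ray 1 and ray 2.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom

    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two camera positions one metre apart, both looking at the same façade point.
cam_a, cam_b = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
point = triangulate_midpoint(cam_a, (0.5, 0.0, 2.0), cam_b, (-0.5, 0.0, 2.0))
distance = math.dist(cam_a, point)  # how far the point is from the first camera
```

Because real pixel matches are noisy, the two rays rarely intersect exactly, which is why the sketch takes the midpoint of their closest approach instead of solving for an exact intersection.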

The new software offers some key advantages over existing methods. One advantage is that it can be used in sunlight. “Other systems work using a measuring grid of infra-red light,” explains Torsten Sattler, a postdoc in Pollefeys’ group who is also participating in the project. In the infra-red method, the device projects a grid of infra-red light onto an object; this grid is invisible to the human eye. An infra-red camera captures the projected image of the grid and uses this to generate a three-dimensional map of the object. “This technique works well indoors,” says Sattler. But he goes on to say that it is poorly suited to outdoor shots in sunlight. This is because sunlight also contains infra-red components, which severely interfere with the measurements. “Outdoors, our method has clear advantages. Conversely, infra-red technology is better suited to indoor use in rooms whose structures are less pronounced, such as rooms with uniform, empty walls.”

The ETH scientists programmed the software for the latest version of the Project Tango mobile device. “These tablets are still in the development phase and are not yet intended for end users, but they have been available for purchase by interested software developers for a few months now, also in Switzerland. The first apps for them have already been developed; however, at the present moment the device is out of stock,” says ETH doctoral student Schöps.

A fisheye lens and rigorous quality control

Pollefeys’ working group developed a 3D scanner for smartphones two years ago, but this was intended for smaller objects. The current project allows whole buildings to be mapped for the first time, thanks to the fisheye lens and the device’s high processing power. “In future, this could probably even be used to survey entire districts,” says Sattler.

As the researchers have found, the mapping of large objects is plagued by errors in the calculated 3D coordinates. “It isn’t that easy to differentiate between correct and incorrect information,” explains Sattler. “We solved the problem by programming the software to scrupulously delete all dubious values.” Real-time feedback is essential to ensuring that the 3D model does not become a patchwork. Thanks to a preview mode, the user always knows which areas of the building they have collected enough information for and which still require scanning.
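The article does not say which criteria the software uses to decide that a value is dubious. As a rough illustration of the general idea only, the following sketch assumes that several independent distance estimates are available for the same picture element and keeps a value only when enough of them agree. The function name `fuse_depths` and the thresholds `min_support` and `rel_tol` are hypothetical and are not taken from the ETH software.

```python
from statistics import median

def fuse_depths(estimates, min_support=3, rel_tol=0.05):
    """Combine several distance estimates for one picture element.

    Assumed rule (not the authors' criterion): accept a value only if at
    least `min_support` estimates lie within `rel_tol` (relative) of the
    median. Otherwise return None, i.e. discard the element as dubious.
    """
    if len(estimates) < min_support:
        return None  # too few observations to trust any of them

    m = median(estimates)
    inliers = [z for z in estimates if abs(z - m) <= rel_tol * m]
    if len(inliers) < min_support:
        return None  # the observations disagree: delete rather than guess

    return sum(inliers) / len(inliers)


consistent = fuse_depths([2.0, 2.02, 1.98, 9.0])  # one gross outlier is ignored
rejected = fuse_depths([1.0, 2.0, 4.0])           # no agreement, so None
```

Deleting a value in this way leaves a hole in the model, which is where the preview mode comes in: the user can see the gap and scan that area again.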

Augmented reality

This real-time feedback is possible because, thanks to the tablet’s high processing power, all of the calculations are performed directly on the device. This also paves the way for applications in augmented reality, says Sattler. One example is a city tour in which a tourist carries a tablet as they move around a city in real life. If they view a building ‘through’ their tablet, additional information about the building can be displayed instantly on the screen. Other potential applications include the modelling of buildings, the 3D mapping of archaeological excavations, and virtual-reality computer games.

Furthermore, the technology could be integrated into cars to allow them to automatically detect the edge of the road, for example, or the dimensions of a parking space. Accordingly, the current project has also utilised findings from the EU’s V-Charge project for the development of self-parking cars, in which Marc Pollefeys’ group was also involved.

The software now developed at ETH forms part of Google’s Project Tango. “Our software is now part of Google’s software database. Of course, we hope that Google will make our technology available to end users and include it as standard in the next version of the Tango tablet,” says Sattler. “Obviously, our dream is that some day every mobile device will include this function, allowing the development of apps that utilise it.” A large computer manufacturer recently announced its intention to put a smartphone with the Google Tango technology platform on the market this coming summer.

Reference

Schöps T, Sattler T, Häne C, Pollefeys M: 3D Modeling on the Go: Interactive 3D Reconstruction of Large-Scale Scenes on Mobile Devices. Contribution to the International Conference on 3D Vision, Lyon, 19–22 October 2015.
