AI Usage among Students

Summary of a Survey conducted among ETH Students in September 2023

Fadoua Balabdaoui, Nora Dittmann-Domenichini, Henry Grosse, Claudia Schlienger, and Gerd Kortemeyer

We report the results of a 4800-respondent survey among students at ETH Zurich regarding their usage of artificial intelligence tools, as well as their expectations of and attitudes toward these tools. We find that many students have arrived at differentiated and thoughtful views and decisions regarding the use of artificial intelligence. The majority of students wish AI to be integrated into their studies, and several wish that the university would provide tools that are based on reliable, university-level materials. We find that acceptance of and attitudes toward artificial intelligence vary across academic disciplines. We also find gender differences in the responses, which, however, are smaller the closer the student’s major is to informatics (computer science).

Familiarity with Tools

Students were asked how familiar they were with certain classes of AI tools. On average, students claim medium familiarity with chat and translation tools, and little to no familiarity with image- or presentation-generating tools. The standard deviations of these self-assessments are large, indicating a wide spectrum of experience and comfort level among the students.

Students use general-purpose tools, such as Google Bard and ChatGPT for writing tasks (in both human and computer languages), Wolfram Alpha for mathematical operations, Whisper for transcribing interviews, Grammarly for grammar checking, DeepL for language translation, and GitHub Copilot for programming tasks. They also use more specialized tools, such as ATLAS.ti for qualitative data analysis, Rayyan for literature reviews, and Quillbot for paraphrasing. Besides Python code, students also generate Matlab code, as well as LaTeX for document typesetting and TikZ for embedding plots into LaTeX documents. Several of them remark that they train their own models, and in this context mention PyTorch and CUDA as tools and BERT as a base model. In addition to these academic uses, students also state that they are using Midjourney and Elevenlabs for generating social media content, and GPT for getting ideas for what to cook.

Current usage of AI in teaching and learning

Little usage in formal teaching scenarios

Only 17.2% of the students stated that they had experienced AI in a teaching situation. The free-form responses mentioned only two instances of instructors using AI for teaching purposes: one was explicit guidance and instruction on how to use AI for computer programming, and the other was an instructor who generated explanatory essays with ChatGPT and had the students look for the mistakes (note that providing guidance on how to use AI is different from teaching how AI works). However, the students had a wide range of ideas on how AI could and should be used, as evidenced by their associated free-form statements (N = 1701).

Entry points to learning

Many students use AI for common language tasks: overcoming initial writer’s block, as well as enhancing, translating, or correcting texts in natural languages. For example, they draft the main points they want to make in simple language or bullet points and then have ChatGPT write “nicely” formulated paragraphs in the required style. Several students mentioned also writing emails to faculty this way. Foreign students also stated that it helps them overcome language barriers.

Several students used ChatGPT as a “better Google” or an “entry point” to Google. It would provide helpful and targeted first responses to specific questions for which a regular Google search only provides very general results. Details of those answers can then be looked up using Google, once ChatGPT has made it clearer what to look for.

A large number of students used ChatGPT to summarize papers or lecture materials, and they stated that the summary is frequently easier to understand than the original. This does not rely on pre-trained knowledge, but on the tool’s ability to “calculate with words.”

Bad at math, good at programming

While several students stated that ChatGPT was bad at math, and that its proofs and derivations tended to be incorrect, they also stated that it is helpful in explaining proofs and derivations provided by the instructors.

Besides operating on natural language, many students highlighted its ability with computer languages and the impact on basic programming teaching and learning. Intriguingly, some students stated that they are using GPT to learn PyTorch and TensorFlow; in other words, they are using AI to learn how to build and train AI solutions.

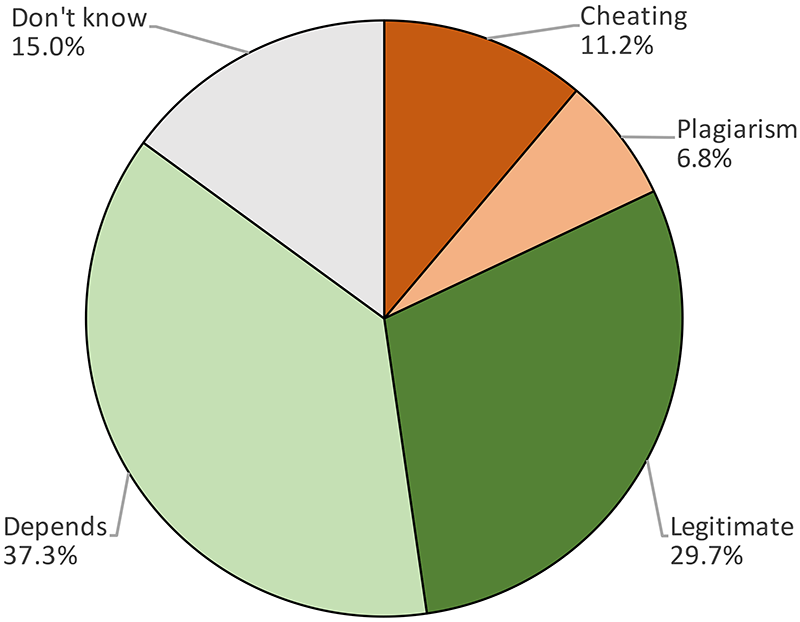

Usage of AI for exams and written assessments

Students were asked how the use of AI-based tools such as ChatGPT should currently be considered in written performance assessments; the figure shows the result. The prevalence of “depends” is not surprising, and in the associated free-form comments many students emphasized that it depends on the rules set by the instructor. This also agrees with ETH's general policy.

Many students agreed that the use of AI tools like ChatGPT is legitimate for tasks such as proofreading, correcting grammar, generating ideas, and aiding with research. Overall, students advocate context-based use and recommend clear guidelines from educational institutions and instructors.

Trust in AI

Students are well aware of potential trust issues surrounding AI. When asked if they had encountered any problems or concerns regarding accuracy, trustworthiness, or bias when using ChatGPT, 80.5% stated that they had. The free-form responses mainly highlighted concerns about the accuracy, reliability, trustworthiness, and consistency of outputs from AI tools like ChatGPT. With regard to accuracy, students frequently reported.

Probably the most frequently mentioned concern, however, was wrong calculations, both numerical and symbolic. The AI often “hallucinated” or generated wrong responses, sometimes even providing different answers to the same question. Some students remarked that ChatGPT can be so convincing about wrong answers that it makes them question and rethink concepts and sample solutions, and that in the end, they learn more.

Similarly, AI’s performance was less trusted when handling nuanced tasks or less common topics. Bias was another issue; while bias is usually seen as unintentional, some students may have suspected that the system was manipulated during training. Furthermore, students raised concerns about data privacy and security, affirming the need for a more transparent, reliable, unbiased, and up-to-date information system.

Attitudes towards AI

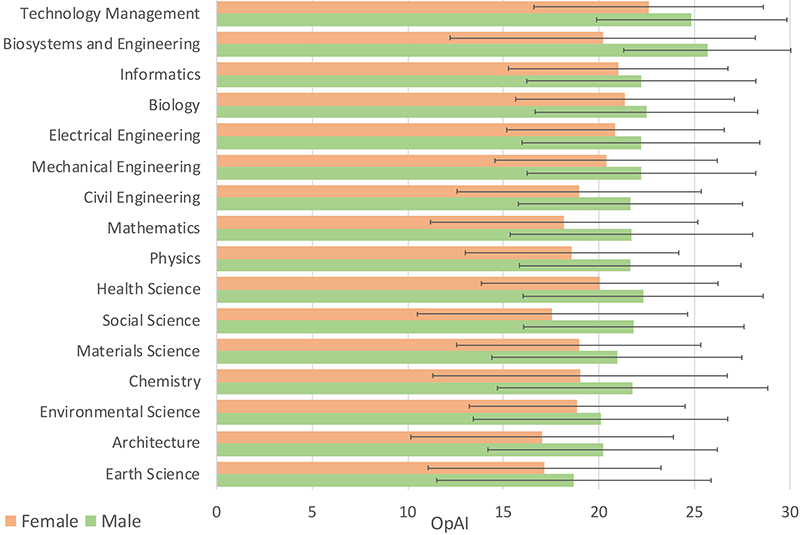

Several questions on the survey were designed to assess the students’ attitudes toward AI. With the notable exception of concerns about exclusion and discrimination, students are moderately optimistic about the use of AI. Particularly strong is the support for continued development of AI and the belief that advantages of this technology outweigh disadvantages.

The figure shows a cumulative attitude score and how these opinions vary by discipline and gender, sorted by the overall average scores (note that gender ratios vary between programs; thus, for example, Biosystems and Engineering has a lower attitude score than Technology Management, even though the male score is higher). The study program on Technology Management, which covers topics of entrepreneurship and commercialization of technology, shows the most positive attitude toward AI. The wide spread of opinions, indicated by the bars, limits claims derived from the data; however, a tendency can be observed that women are more skeptical about AI than men. Engineering and biological sciences tend to be more accepting of AI than non-engineering and system-oriented sciences, with Mathematics and Physics in the middle.
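The remark about gender ratios can be made concrete with a small weighted-average calculation. The sketch below assumes, for simplicity, that a program's overall score is the ratio-weighted mean of just two group means; the function name and all numbers are hypothetical and are not taken from the survey.

```python
def overall_mean(male_score, female_score, male_share):
    """Overall program mean as a ratio-weighted average of two group means.

    male_share is the fraction of male respondents (between 0 and 1).
    """
    return male_share * male_score + (1.0 - male_share) * female_score


# Hypothetical numbers for illustration only, NOT survey results.
# Program A has the higher male score but a smaller share of male respondents.
program_a = overall_mean(male_score=3.8, female_score=3.2, male_share=0.4)
program_b = overall_mean(male_score=3.7, female_score=3.4, male_share=0.8)

print(f"Program A overall: {program_a:.2f}")
print(f"Program B overall: {program_b:.2f}")
```

With these made-up inputs, program A ends up with the lower overall score despite its higher male score, which is the kind of effect the parenthetical note describes.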

As it turns out, adding the perceived helpfulness of chatting as a covariate in an ANCOVA reduces some of these differences between disciplines and departments; in other words, disciplinary differences in attitude might depend on how helpful AI is in that discipline. Using perceived helpfulness of chatting as a covariate also closes some of the gender gap.
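To illustrate how a covariate can shrink group differences, the following minimal sketch computes covariate-adjusted group means using the pooled within-group slope, which is the adjustment step of a one-way ANCOVA. It does not reproduce the survey's actual model or data and omits the significance test; the function name, group labels, and all values are synthetic assumptions.

```python
from statistics import mean


def adjusted_means(groups):
    """Covariate-adjusted group means (the adjustment step of an ANCOVA).

    groups maps a group name to a list of (covariate, outcome) pairs.
    Each group mean is shifted to the grand covariate mean using the
    pooled within-group regression slope.
    """
    grand_x = mean(x for pairs in groups.values() for x, _ in pairs)
    sxy = 0.0  # pooled within-group sum of cross products
    sxx = 0.0  # pooled within-group sum of squares of the covariate
    group_means = {}
    for name, pairs in groups.items():
        mx = mean(x for x, _ in pairs)
        my = mean(y for _, y in pairs)
        group_means[name] = (mx, my)
        sxy += sum((x - mx) * (y - my) for x, y in pairs)
        sxx += sum((x - mx) ** 2 for x, _ in pairs)
    slope = sxy / sxx
    return {
        name: my - slope * (mx - grand_x)
        for name, (mx, my) in group_means.items()
    }


# Synthetic data: (perceived helpfulness, attitude score), NOT survey data.
data = {
    "discipline_a": [(1, 1.5), (2, 2.0), (3, 2.5)],
    "discipline_b": [(3, 2.7), (4, 3.2), (5, 3.7)],
}
raw = {name: mean(y for _, y in pairs) for name, pairs in data.items()}
adj = adjusted_means(data)
raw_gap = raw["discipline_b"] - raw["discipline_a"]
adj_gap = adj["discipline_b"] - adj["discipline_a"]
print(f"raw gap: {raw_gap:.2f}, adjusted gap: {adj_gap:.2f}")
```

In this synthetic case most of the raw gap between the two groups is explained by the covariate, so the adjusted gap is much smaller than the raw one.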

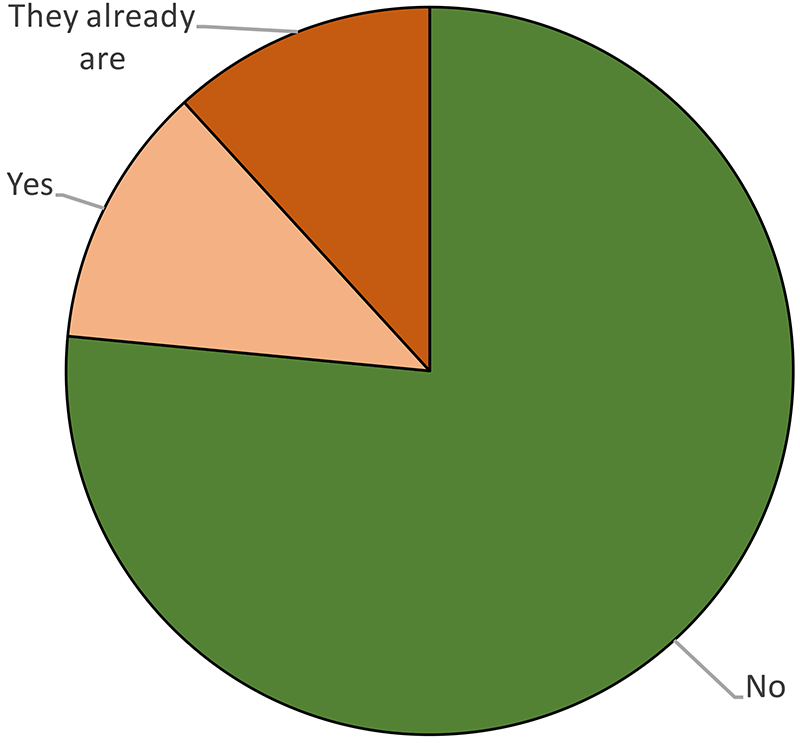

Future of Teaching

Overall, the students do not believe that current forms of teaching and assessment will be outdated any time soon. The figure shows the answer distribution for the question of whether or not current forms of teaching are obsolete; over three quarters (76.6%) of the students state that current techniques will not be obsolete.

Many students express a desire for these tools to be incorporated into their learning experience, believing that AI could enhance their academic and professional lives. This goes along with their answer to the question of whether ETH should offer learning opportunities to promote the use and application of AI-based tools in their studies (about two thirds, 65%, answered “yes”). The question was certainly formulated exuberantly (“promote the use”), but students have a realistic view of the buzz and hype around AI: on a scale of exaggerated (1) to appropriate (5), they rated the buzz around AI 3.2 ± 1.1.

While several students suggest getting a campus license for GPT-4, some students suggest creating an institutional AI which provides trustworthy and targeted learning support for particular courses.

Conclusions

The survey results provide insight into students’ familiarity with, usage of, and attitudes towards artificial intelligence (AI) tools in an academic setting. We found a disparity in familiarity, which underscores the diverse range of experiences and comfort levels among students. While only a minority have experienced AI in formal teaching situations, they have a plethora of ideas on its potential applications, especially in programming and language-related tasks. The sentiment towards AI is generally optimistic, with students recognizing its potential benefits in enhancing their academic and professional endeavors. However, concerns about trustworthiness, accuracy, and potential biases in AI outputs are prevalent. The majority believe that while AI tools can be beneficial supplements and should be integrated into teaching scenarios, they should not replace traditional learning methods.

Furthermore, the responses suggest a nuanced perspective on the use of AI in assessments. While many students deem AI tools legitimate for tasks like proofreading and idea generation, there’s a consensus that primary content should originate from the student, with verbatim copying from AI outputs considered unethical. The need for transparency and clear guidelines from educational institutions is emphasized, with students advocating for a context-based use of AI. Despite the potential advantages, there are concerns about over-reliance on AI, data privacy issues, and the challenge of distinguishing AI-generated work. Several students asked for institution-provided AI tools to overcome these concerns.