Robots for comfort and counsel

From robots that offer solace to algorithms that help judges make fact-based decisions, robotics and machine learning are entering new domains that were once the preserve of humans.

Five years ago, while studying in Pennsylvania for a Master’s degree in robotics, Alexis E. Block was asked to choose a topic for her thesis. Almost right away, she said she wanted to develop a robot that would hug and comfort her. Block’s father had recently passed away, and her mother lived in Wisconsin, a two-and-a-half-hour flight away. With millions of people living far from their loved ones, she figured she wasn’t the only one craving physical comfort. Wouldn’t it be marvellous, Block thought, if we could at least send a hug to the people we love and miss so much? And it could make a real difference: it is well established that human hugs and physical contact reduce blood pressure, alleviate stress and anxiety, and boost the immune system.

Six commandments for robot hugs

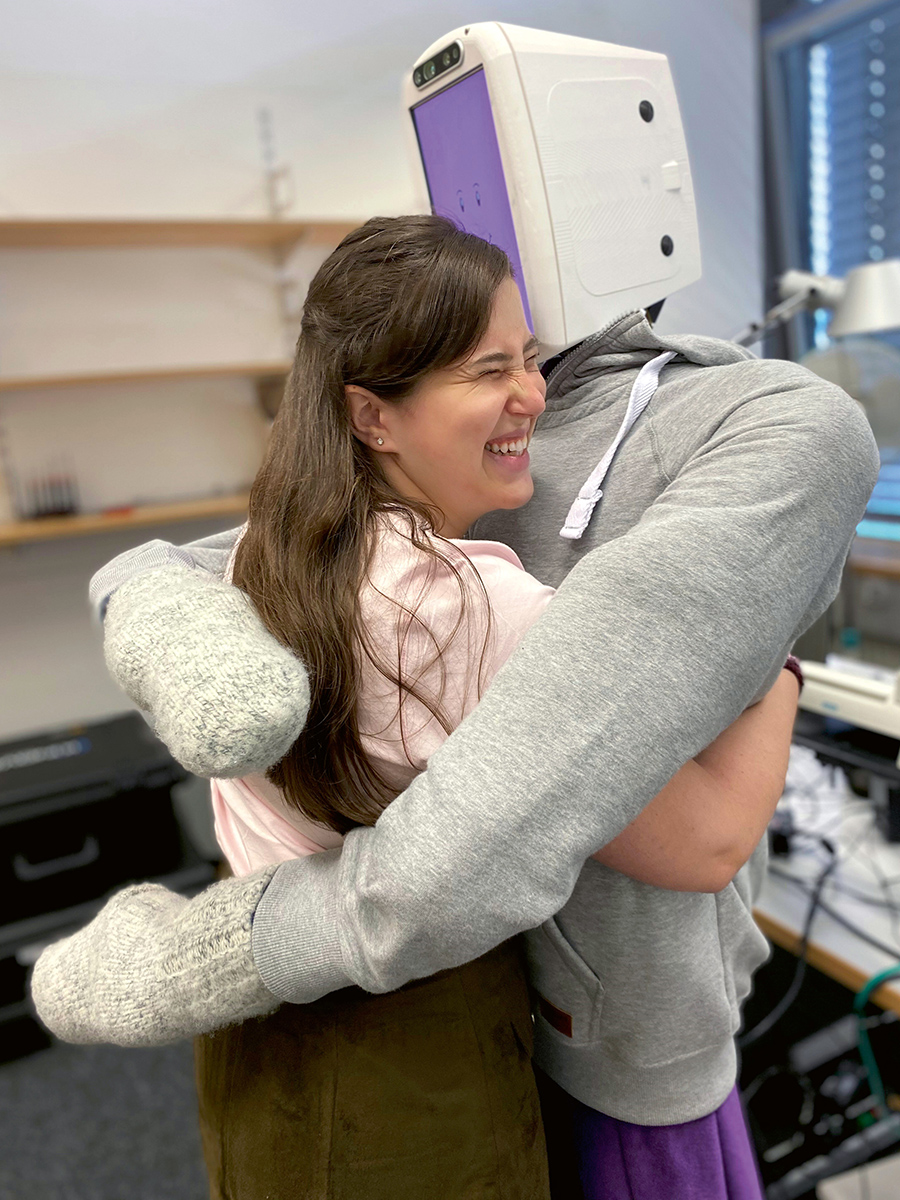

Today, Alexis E. Block is a doctoral student at the Max Planck ETH Center for Learning Systems. Dividing her time between Stuttgart and Zurich, she is continuing to develop and improve her hugging robot, or HuggieBot. “Our work is based on our six design tenets, or ‘commandments’, for natural and enjoyable robot hugging,” says Block. “A hugging robot should be soft, warm and human-sized. It should be able to visually perceive the person it is hugging, adjust its embrace to the user’s size and position, and always let go when the person wants to end the hug.” Block therefore opted to clad the upper body of her HuggieBot with heating and softening elements, including a custom inflatable torso. Sensors in the torso measure the pressure applied by the person being hugged, detecting when the user starts and stops hugging the robot. Meanwhile, torque sensors in the arm measure how tightly the robot is hugging. Using a 3D printer, Block produced a head with a built-in screen that displays animated faces. This enables the robot to laugh and wink while simultaneously, through a built-in depth-sensing camera, detecting the distance and movements of the person being hugged and responding accordingly.
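For readers curious how a robot might decide that a hug has started or ended from torso pressure alone, here is a minimal sketch. It is not HuggieBot's actual code: the function name, the two thresholds and the pressure units are assumptions made purely for illustration. It uses two thresholds (hysteresis) so that small fluctuations in the readings do not make the detected state flicker.

```python
from typing import Iterable, List, Tuple


def detect_hug_events(
    pressures: Iterable[float],
    start_threshold: float = 1.2,  # hypothetical value, arbitrary units
    stop_threshold: float = 1.05,  # hypothetical value, arbitrary units
) -> List[Tuple[int, str]]:
    """Return (sample_index, "start" | "stop") events from pressure samples."""
    events: List[Tuple[int, str]] = []
    hugging = False
    for i, p in enumerate(pressures):
        if not hugging and p >= start_threshold:
            # Pressure rose clearly above baseline: the user is squeezing.
            hugging = True
            events.append((i, "start"))
        elif hugging and p <= stop_threshold:
            # Pressure fell back close to baseline: the user has let go.
            hugging = False
            events.append((i, "stop"))
    return events


# Made-up readings: baseline, a squeeze, then release.
samples = [1.0, 1.0, 1.3, 1.25, 1.1, 1.0, 1.0]
print(detect_hug_events(samples))  # [(2, 'start'), (5, 'stop')]
```

In a real robot the "stop" event would be the cue to release the arms, in line with the commandment that the robot should always let go when the person wants to end the hug.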

Robotics is increasingly focusing on the use of soft, organic-like, “bio-inspired” materials. This throws up challenges for other disciplines such as materials science and is also attracting the attention of educators. From February to June of this year, the Competence Center for Materials and Processes organised a series of lectures on soft robotics. Alongside presentations by renowned researchers from Stanford, Yale, Harvard and MIT, there were also talks by experts from the ETH Domain. The Competence Center will be opening a doctoral school this summer, with these kinds of bio-inspired systems as one of its five focal points.

Particularly appealing to introverts

In 2020, Block tested the second version of the robot, HuggieBot 2.0, in the lab. A total of 32 test subjects were hugged by the robot and asked to share their experiences. “It was fascinating,” says Block. “Some of the hugs lasted so long that I actually began to get nervous!” Several subjects told her the firm hug was just what they needed. She noticed that HuggieBot 2.0 offered particular benefits to introverted people because they no longer worried about making an odd impression if they wanted a hug to last longer. Once the experiment was over, she also found that the study participants had a significantly more positive attitude towards robots and their introduction into everyday life.

Block has since developed HuggieBot 3.0, which is designed to detect and classify intra-hug gestures such as rubs, pats and squeezes and to respond appropriately, as well as an even more advanced model, HuggieBot 4.0. These versions should gradually make robot hugs more similar to human ones. Block’s team is also working on an app that will allow users to “send” hugs remotely via HuggieBot. While passing on a loved one’s hug, HuggieBot can simultaneously play video or audio messages from the sender via its digital interface. “I don’t believe robot hugs will ever be able to completely replace human hugs, however much progress we make,” says Block emphatically. “But what robots can do is alleviate loneliness and perhaps even improve people’s mental health in situations where physical contact is made impossible by distance or illness.” Block can already see possible applications in hospitals, care homes and, of course, universities.

Combating prejudices with algorithms

Elliott Ash, Assistant Professor at the Department of Humanities, Social and Political Sciences, is similarly cautious about the potential for robotic systems to become a regular part of our daily lives: “Robots will never replace judges in legal proceedings, but they will increasingly be able to support them.” Ash develops virtual assistants that make it easier for judges to base their decisions on previous legal rulings and minimise the impact of their prejudices. Studies from the US, for example, show that darker-skinned defendants are typically sentenced to longer prison terms for the same offence and are less likely to be released on bail. In San Francisco, some judges approve almost 90 percent of asylum applications, while others approve only 3 percent. This situation is complicated further by the daunting backlog of court cases. All too often, judges struggle to keep up with their caseload and are too pressed for time to conduct extensive research. A virtual assistant that is able to analyse all the precedents in a matter of seconds and channel those findings into suggestions for the case at hand could greatly improve the quality of judgements. In future, the use of big data, machine learning and decision theory could also enable the inclusion of sound recordings, photographs and surveillance images in the decision-making process.

At the same time, Ash is using machine learning to shed light on the legal system itself. He recently joined forces with the World Bank to investigate whether the under-representation of women and Muslims as judges in Indian courts leads to bias in court rulings. Previous studies had already indicated that judges tend to favour their own gender and religion.

Ash and his colleagues developed a neural network to retrieve female and Muslim names from more than 80 million publicly available court documents filed by over 80,000 judges between 2010 and 2018. They then deployed an algorithm to search for correlations between names and rulings. The researchers found no statistically significant discrimination in sentencing, though Ash is quick to point out that this does not mean India’s legal system is free from prejudice. Discrimination can also occur at the hands of police or prosecutors, he says: “Our results will help politicians decide where they can most effectively tackle discrimination.” Ash also worked in Brazil, where he used freely available budget and audit data from hundreds of municipalities to train an algorithm to spot anomalies. Compared to the previous method, in which auditors visited a random selection of municipalities each year, the use of the algorithm led to the detection of twice as many cases of corruption.
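What “no statistically significant discrimination” means can be illustrated with a simple check. The article does not describe the researchers' actual method, so the sketch below is only a textbook two-proportion z-test, and the function name and all counts are invented for illustration. It compares how often a favourable ruling occurs when judge and defendant share a group with how often it occurs when they do not.

```python
import math
from typing import Tuple


def two_proportion_z(
    favourable_a: int, total_a: int, favourable_b: int, total_b: int
) -> Tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in rates."""
    rate_a = favourable_a / total_a
    rate_b = favourable_b / total_b
    # Pooled rate under the null hypothesis that both groups are treated alike.
    pooled = (favourable_a + favourable_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: rulings where judge and defendant share a group (a)
# versus rulings where they do not (b).
z, p = two_proportion_z(favourable_a=510, total_a=1000,
                        favourable_b=500, total_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A large p-value, as in this made-up example, would mean the observed gap is compatible with chance. As Ash stresses, that is not the same as showing that no prejudice exists anywhere in the system.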

Yet as soon as machine learning is introduced into sensitive areas such as law, questions are bound to be asked about the fairness and ethics of the algorithms on which it is based. For example, how do we stop the same prejudices that shape our world from being programmed into an algorithm in exactly the same form? “Algorithms shouldn’t be black boxes,” says Ash. “They should be accessible to all, not for profit and under democratic control.” He envisions a kind of Wikipedia of algorithms that gives everyone an insight into the code used in public administration.

Can robots make us happier?

Robotics expert Alexis E. Block believes that the COVID-19 pandemic and the need to maintain physical distance have changed our attitudes towards robots. “People used to laugh at me at conferences because they thought HuggieBot was a silly idea,” she says. “But now I don’t have to explain to anyone why hugs are so important and why we’re developing these kinds of systems.” She is currently collaborating with a psychologist to test scientifically whether being hugged by HuggieBot 4.0, the latest model of the hugging robot, can alleviate stress and produce feelings of happiness, just as human hugs do. Under lab conditions, the 52 test subjects are exposed to mild stress and then hugged by a person or a robot, or not hugged at all. Their heart rate is measured and saliva samples are taken to measure levels of oxytocin, which is associated with positive emotions such as those involved in social bonding, and of cortisol, which indicates stress. Whatever the results, Block will continue to look forward to a hug with HuggieBot at the end of a long day because, as she says, “It just feels fantastic when it hugs me!”

This text has been published in the 21/02 issue of the Globe magazine.

About

Alexis E. Block is currently a doctoral student at the Center for Learning Systems, a joint programme of the Max Planck Institute for Intelligent Systems and ETH Zurich.

Elliott Ash is Assistant Professor in the Department of Humanities, Social and Political Sciences and head of the Law, Economics and Data Science Group.
